“desolate city”

Apparently there’s an alternative to VQGAN+CLIP called Zoetrope 5.

I haven’t tried it yet but it has a whole heap of sliders and options in addition to the usual prompt-based systems. I’ll look into it more when I wake up.

Just tried this out, hard to say for sure if it’s “better” or what, but I liked the one I got:

“bart simpson marge simpson edvard munch the scream” at weight 1 and “high definition” at weight 0.3. It almost looks like something! I didn’t mess with any of the other settings.

master chief in the style of lisa frank

how did you do that?

i cut an image into strips and did each strip w/ a different prompt and then stitched them back together

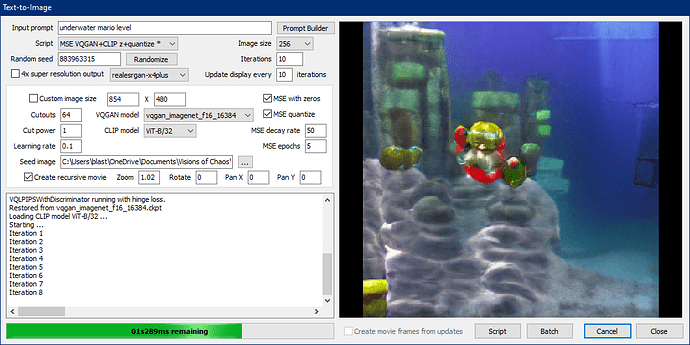

i'm using Visions of Chaos with the MSE VQGAN+CLIP z+quantize script
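For anyone wondering what the strip trick looks like mechanically, here's a rough sketch of the cut / process / stitch loop. It's not how Visions of Chaos does it internally. The image is just a 2D list of grayscale pixel values, and `generate` is a hypothetical stand-in for whatever actually runs VQGAN+CLIP on a strip with its prompt.

```python
from typing import Callable, List

Image = List[List[int]]  # rows of grayscale pixel values (simplified stand-in for a real image)


def split_into_strips(img: Image, n_strips: int) -> List[Image]:
    """Cut the image into n_strips vertical strips of (nearly) equal width."""
    width = len(img[0])
    bounds = [round(i * width / n_strips) for i in range(n_strips + 1)]
    return [[row[bounds[i]:bounds[i + 1]] for row in img] for i in range(n_strips)]


def stitch_strips(strips: List[Image]) -> Image:
    """Glue the strips back together side by side, row by row."""
    height = len(strips[0])
    return [[px for strip in strips for px in strip[r]] for r in range(height)]


def process_in_strips(
    img: Image,
    prompts: List[str],
    generate: Callable[[Image, str], Image],  # hypothetical: runs the generator on one strip
) -> Image:
    """One strip per prompt: cut, run each strip with its own prompt, stitch back."""
    strips = split_into_strips(img, len(prompts))
    processed = [generate(strip, prompt) for strip, prompt in zip(strips, prompts)]
    return stitch_strips(processed)
```

The strips are vertical here only because that's the simplest slicing on a list of rows. Horizontal strips would just slice the row list instead.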

Wow. Did those have a base image? Did you do something with a video editor? They have an interesting flow that goes beyond what the AI's iterations alone would give. How did you achieve that effect?

I still haven’t fed VQGAN+CLIP any base images to work from. I can’t quite figure out how to feed images to the Colab notebook even though I see the prompt for it. Maybe it expects a net-accessible URL as the input(?). I simply haven’t figured that feature out yet. As such, if I want to mess with base images my only real recourse at this point is to burn up some of my very limited server time on Deep Dream.

I would absolutely recommend giving Visions of Chaos a try if your hardware is capable of running it. The most relevant piece of functionality here is called recursive movies.

it takes the output of the last set of iterations as the seed image for the next set of iterations (a set is 10 iterations by default)

you can have it offset the image by a few pixels vertically or horizontally, zoom in slightly, or rotate the image and it will continue iterating with the same seed on a slightly different image.

one of the cool things about it is you can change the prompt after a set of iterations to something different, or you can adjust the recursive movie parameters (changing zoom speed or rotation etc)
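Here's a rough sketch of that recursive-movie loop, just to show the idea: run a set of iterations, keep the result as a frame, nudge it (offset and/or zoom), and feed it back in as the next seed, with an optional prompt change at chosen sets. This is not Visions of Chaos's actual code. `iterate` is a hypothetical stand-in for running N VQGAN+CLIP iterations, the image is a 2D list of pixel values, and rotation is left out to keep it short.

```python
from typing import Callable, Dict, List

Image = List[List[int]]  # rows of pixel values (simplified stand-in for a real image)


def shift(img: Image, dx: int, dy: int) -> Image:
    """Offset the image by (dx, dy) pixels, wrapping around the edges."""
    h, w = len(img), len(img[0])
    return [[img[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]


def zoom(img: Image, factor: float) -> Image:
    """Zoom in about the centre with nearest-neighbour sampling (assumes factor >= 1)."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    return [
        [img[round(cy + (y - cy) / factor)][round(cx + (x - cx) / factor)] for x in range(w)]
        for y in range(h)
    ]


def recursive_movie(
    seed: Image,
    prompt_schedule: Dict[int, str],  # set index -> prompt to switch to; must contain key 0
    n_sets: int,
    iterate: Callable[[Image, str, int], Image],  # hypothetical: runs n iterations on an image
    iterations_per_set: int = 10,
    dx: int = 0,
    dy: int = 0,
    zoom_factor: float = 1.0,
) -> List[Image]:
    frames = []
    img = seed
    prompt = prompt_schedule[0]
    for s in range(n_sets):
        prompt = prompt_schedule.get(s, prompt)  # change the prompt if scheduled for this set
        img = iterate(img, prompt, iterations_per_set)
        frames.append(img)
        # the transformed output becomes the seed for the next set
        img = zoom(shift(img, dx, dy), zoom_factor)
    return frames
```

A schedule like `{0: "banana", 20: "apple"}` would run the first 20 sets on "banana" and switch to "apple" after that, which is roughly the banana-into-apple experiment described below.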

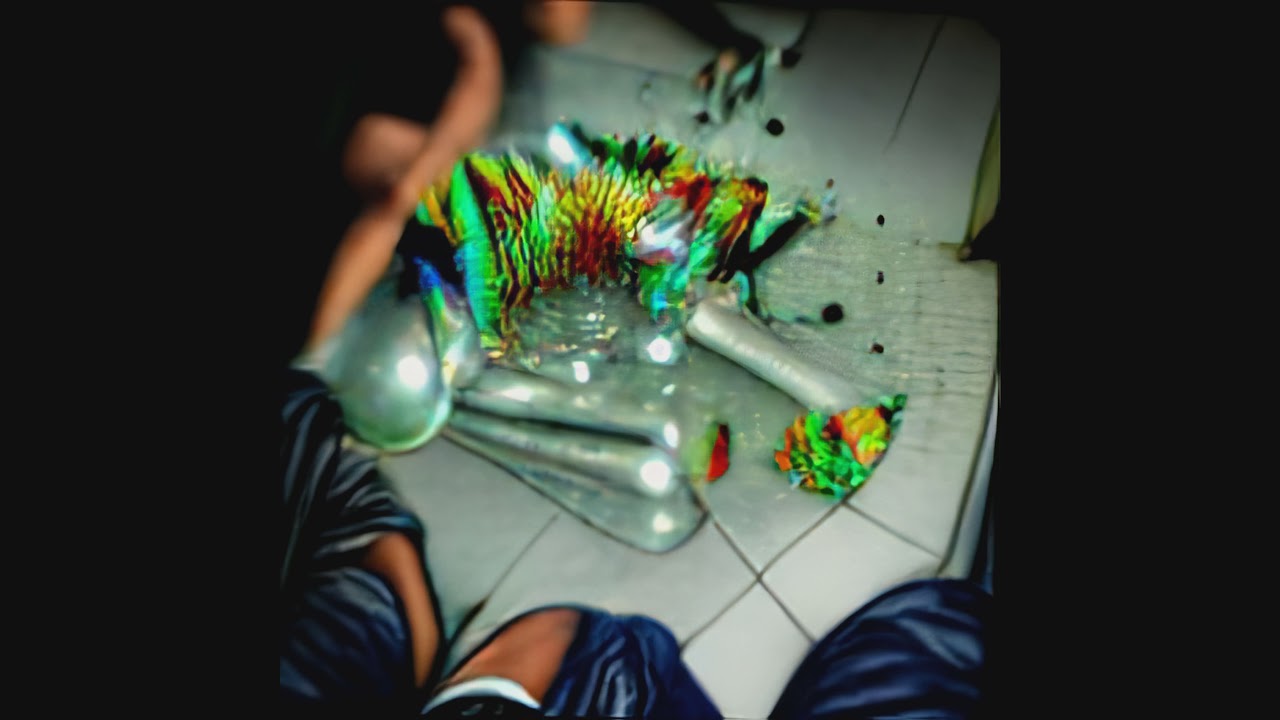

here's a seed image of a banana being turned into an apple

and here's that image trying to become a banana again

this one i was messing with the speed of the rotation

i use several other tools for processing as well

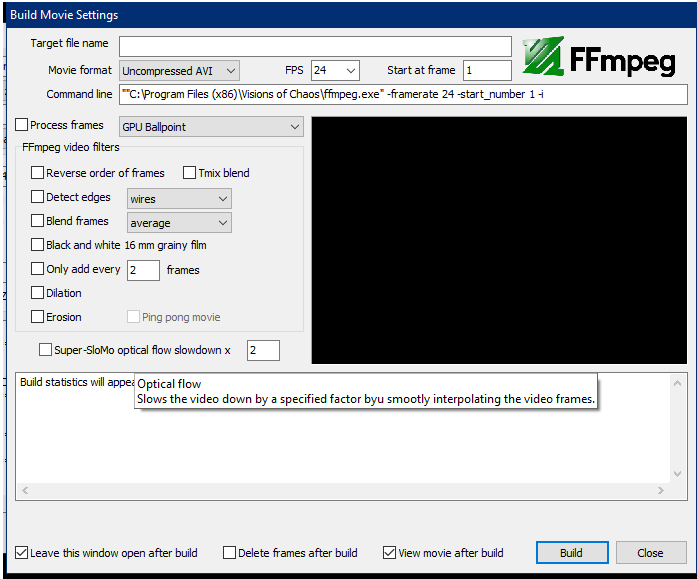

this is the built-in video compiler. sometimes i try the optical flow setting if i haven't made enough frames for a video longer than 2-3 seconds
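To put numbers on the "not enough frames" problem: with n frames and k generated in-between frames per gap you end up with n + (n - 1)k frames. At an assumed 30 fps, 60 frames is 2 seconds, and one in-between per gap gives 119 frames, just under 4 seconds. The sketch below fills the gaps with a plain linear crossfade. To be clear, that's a much simpler technique than optical flow (which estimates motion between frames and warps pixels along it), so it's only a stand-in to show the frame-count side of things.

```python
from typing import List

Frame = List[List[float]]  # rows of pixel values (simplified stand-in for a real frame)


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linear crossfade between two frames: t=0 gives a, t=1 gives b."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def interpolate(frames: List[Frame], inbetweens: int) -> List[Frame]:
    """Insert `inbetweens` crossfaded frames between each pair of consecutive frames."""
    if len(frames) < 2:
        return list(frames)
    out = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        for k in range(1, inbetweens + 1):
            out.append(blend(a, b, k / (inbetweens + 1)))
    out.append(frames[-1])
    return out


def duration_seconds(n_frames: int, fps: float) -> float:
    """How long a clip of n_frames lasts at the given frame rate."""
    return n_frames / fps
```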

i also use Topaz Video Enhance AI to upscale the final product, at least until i try out the new built-in upscaling

Well, hot damn. It doeth appear that my GPU is more than capable of running this program based on their stated criteria. I will definitely give this a whirl. Be nice to run it on my own hardware so I don’t have to wait for Google or Deep Dream to give me my GPU time allowance.

here’s a good sample of the kind of potential this software has. once i figure out how to get it running on my VM i can't wait to keep experimenting with it

cool

https://www.reddit.com/r/deepdream/comments/plj3m7/made_with_custom_deepdream_implementation_in/

i forgot about this thread, but i meant to post a picture of my “new” (now several weeks old) stream setup, which i spent way too much time on for someone who hasn’t streamed in like a year. i generated it using that VQGAN+CLIP web VM:

I'd love to use this to make UIs for some games in the future.

I did something similar with my Unraid banner (it's a bunch of Moomin-like entities). maybe i should try generating some meaty chunks for Unraid

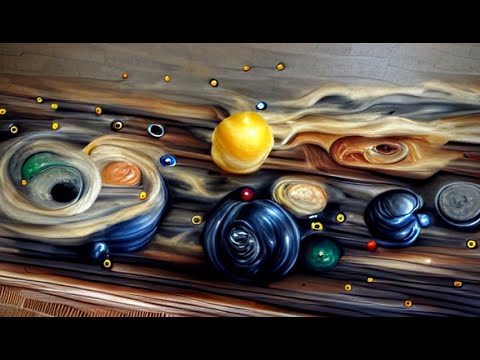

here's a music video created with Visions of Chaos. yet again, I think the concept is a lot more interesting than the execution here. I’m curious how they rendered it at such a high resolution (probably upscaling) and how long it took to produce the whole video at 60fps

behold the Cyber Twink